Microsoft recently introduced VASA-1, an AI model that turns a single portrait photo and an audio clip of a person into a lifelike video in which the individual appears to be speaking or singing, complete with lip-sync, facial expressions, and head movements. According to Microsoft's research paper, the model was trained on the public VoxCeleb2 dataset of talking-face videos, while the portraits shown in the demos are AI-generated images from tools such as DALL·E-3, animated with the supplied audio clips.

The approach resembles tools from competitors such as Runway and Nvidia, but Microsoft’s research paper claims that VASA-1 significantly outperforms existing methods in quality and realism. The model can process audio of any length and generate facial animation synchronized to it.

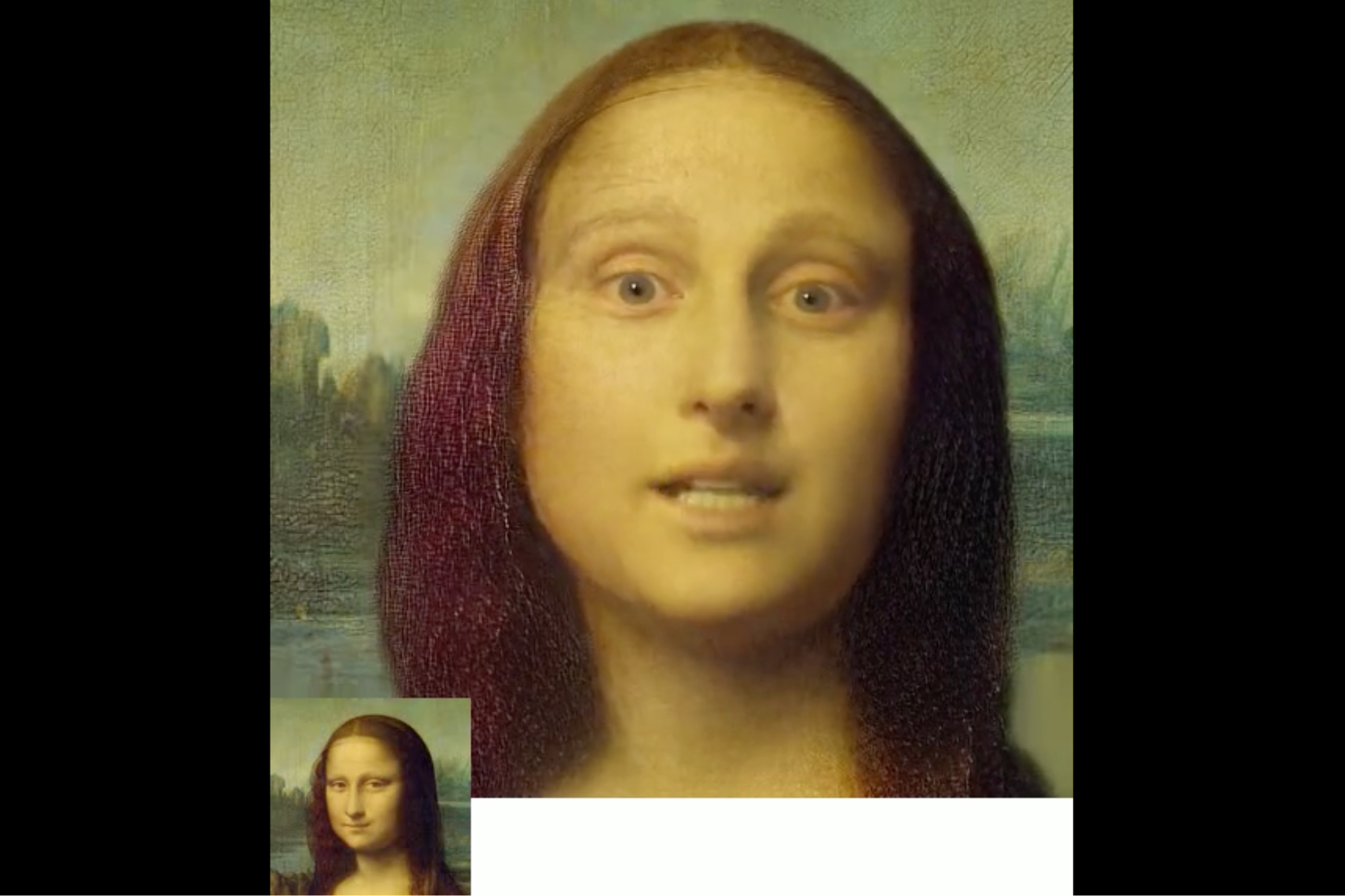

The model also handled inputs outside its training data. In one demo, it animated the Mona Lisa, making her lip-sync to Anne Hathaway’s “Paparazzi.” According to the researchers, this shows that the model can handle diverse inputs, including artistic photos, singing audio, and speech in various languages.

Mona Lisa (Credits: Entrepreneur)

The researchers also highlighted the model's real-time performance, presenting a demo video in which it animates images on the fly with dynamic head movements and nuanced facial expressions. Such advances raise concerns about the misuse of deepfake technology, however, and Microsoft emphasized its commitment to ethical use and to advancing forgery detection techniques.

Despite the risks associated with deepfakes, the researchers pointed to positive applications of their technique, such as improving accessibility and supporting education. This echoes broader discussions within the tech industry about responsible AI development and the need to mitigate potential harm while preserving the benefits of the technology.

Google recently demonstrated a similar research project that creates user-controlled video from static images, further evidence of the growing interest and investment in AI-driven media generation. The pace of this research has implications for fields ranging from entertainment to cybersecurity.